The trust equation

Why credibility and transparency matter, and how brands build them

Hibato Ben Ahmed

Strategy Specialist, UX

Preface

Trust has become one of the biggest challenges for brands today. Across industries such as finance, technology, and cybersecurity, credibility is eroding as consumers demand real proof of responsibility, transparency, and shared values. In a fragmented information landscape where authority is easily challenged and AI is embedded in everyday life, earning trust requires more than promises and good intentions.

Drawing on insights from the Financial Times, Edelman, PwC, and McKinsey, this article explores why trust underpins every part of business and how organizations can close the gap between what people expect and what they actually experience.

Introduction

The past few years have brought a growing sense of uncertainty. From the pandemic to climate disruption and political division, people are questioning whether the systems that shape their lives still serve them. Businesses are also facing rising skepticism.

A 2024 report by PwC found a striking gap between perception and reality: while 90% of executives believe consumers trust their brand, only 30% of the public agree. A 2025 report by the Financial Times (FT) and the Institute of Practitioners in Advertising (IPA) found that the biggest trust gaps are in finance, insurance, technology, and cybersecurity, all of which demand a higher degree of transparency and accountability that many consumers feel they're not getting. As AI reshapes everyday life, brands risk deepening this divide if they cannot show that they use data responsibly.

There are wider risks at play, too. When trust is lacking, credible information can lose its impact, particularly when the intent behind it is unclear or audiences struggle to engage with how it’s communicated. This is especially important for organizations that depend on scientific evidence to inform public understanding and guide decision-making. Without trust, this important work loses traction. And in a fragmented information environment where authority is more easily challenged and attention harder to hold, regaining credibility is more difficult than ever.

How might we build and sustain trust in a world where confidence is in short supply and where the consequences of losing it are only becoming more visible?

Trust and science

Scientific authority is often assumed to speak for itself, but research suggests that trust depends less on credentials than it does on connection. According to Pew Research Center, only 23% of Americans in 2023 had a great deal of confidence in scientists to act in the public interest, down from 35% in 2019, while those expressing little or no confidence doubled.

While some have framed this as a crisis of confidence, international research points to a more nuanced picture. A 2025 study led by the University of Zurich and ETH Zurich found that, although scientists are viewed as honest and competent, many people feel that science is too removed from everyday life. Of the 72,000 respondents, 83% said scientists need to communicate their work more clearly. Visibility and relatability are now essential to building trust in a world where expertise alone no longer guarantees credibility. For science, that means aligning communication with shared values and making the “why” behind research easier to understand.

When Whitehead Institute wanted to rebrand, they had already identified this challenge. Their communications were historically geared toward peers, emphasizing institutional legacy over real-world relevance. The rebrand shifted this perspective, reframing Whitehead’s online presence to reflect a community of researchers working in service of human health and collective knowledge. Through a new visual identity and a more user-focused website, the institute made its science more accessible and its researchers more relatable—curious, engaged individuals working to advance health and society.

Other knowledge institutions have gone through similar shifts. EBRAINS, the digital research platform for neuroscience, was originally built as a collaboration space for experts, but its structure made it difficult for others to access and engage with the work. The platform was reshaped around discoverability and participation, with a more accessible design system that enables neuroscientists to collaborate and contribute more openly. By making tools and data easier to navigate, and by tying them directly to research goals, the platform shows how transparency can strengthen trust even in highly specialized fields.

Demystifying the language of expertise

The need to close the gap between domain expertise and public understanding reflects broader shifts in consumer expectations.

A 2025 Edelman survey of over 15,000 people found that 64% of consumers choose brands that share their values, up 12 points in the last five years. But when credible information is hard to understand or buried in formats that don’t match how people actually consume content, it loses its impact. And when that happens, people turn to whatever is easier to access and digest, even if it’s less reliable.

These challenges have pushed many organizations to reconsider how their work reaches the public. The International Energy Agency (IEA) recognized that its position as a technical authority was no longer aligned with changing consumer habits. Amid growing urgency around clean energy, the IEA needed to move beyond the detailed analysis it was known for and become a distributor of timely insights that people could quickly grasp and act on. By revamping both its brand and its publishing infrastructure, the IEA transformed a static, report-driven model into a dynamic platform that surfaces real-time data in ways that are easier for analysts, policymakers, and the broader public to interpret and apply. This shift allowed the agency to reach wider audiences while preserving the depth and authority of its research.

The role of AI in trust

Where scientific institutions have learned the importance of presence in building public trust, tech companies are navigating similar demands at a faster pace and, often, in higher-stakes environments. AI tools are becoming part of everyday life for both consumers and businesses, yet adoption is moving faster than most people’s understanding of how these systems work, what data they rely on, and who is responsible for their outputs.

While AI is sparking important discussions about ethics and security, business leaders are grappling with how much confidence to place in AI tools amid an ongoing race to the top. Research by the IPA and the Financial Times shows that fewer than one in ten decision-makers fully trust generative AI, even as most see it as essential to remaining competitive.

For consumers, the main concern is transparency and control over how AI is used. PwC found that 57% of people are wary of how their personal data is handled, especially by automated systems, while a McKinsey report revealed that 59% believe brands put profit ahead of data protection. Most consumers are not interested in the technical details of AI — what matters is whether companies show they are acting responsibly. Making those efforts visible is crucial, especially when the technology itself is difficult to understand.

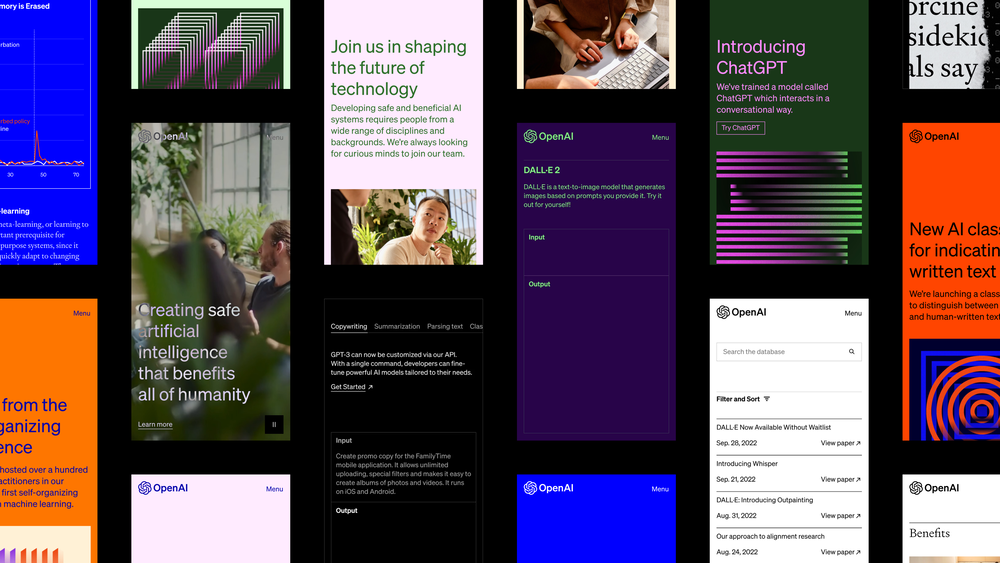

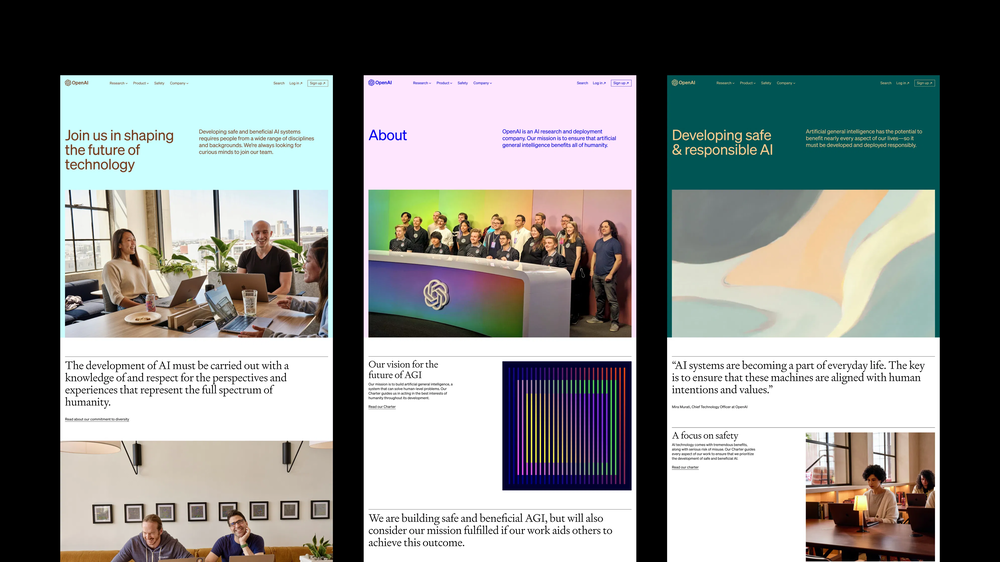

When OpenAI approached AREA 17, it was at a pivotal moment. Having operated primarily as a research lab, the company was preparing to launch its first widely available commercial product. The redesign we embarked on together focused on creating a platform that could support this transition while clearly communicating OpenAI’s mission of making safe and ethical AI accessible to everyone. It also aimed to humanize a company increasingly seen as symbolic of AI’s rapid expansion, which called for a more human-centered digital identity.

The rebrand introduced a personal tone, supported by candid photography and a warmer palette to bridge the gap between technical depth and human collaboration. This made OpenAI’s work feel less like a scary "black box" and more like a human effort to advance understanding and access through technology.

The same principle applies to other tech companies seeking trust. To earn confidence from audiences concerned about data use, brands must be transparent about who they are, what they stand for, and how their values are expressed through everyday actions.

Building trust as a strategic priority

If trust is to be earned rather than assumed, then business leaders must treat it as more than a surface-level branding exercise. The evidence clearly shows that trust is both ethically important and commercially significant. Research from PwC links trust directly to profitability and market resilience, while McKinsey and Edelman both highlight the growing cost of failing to meet expectations around privacy, transparency, and responsible AI use.

To close the gap between intention and perception, businesses need to find better ways to communicate their values and more meaningful ways to understand and respond to public concerns. Whitehead Institute, the IEA, OpenAI, and EBRAINS all serve as case studies of organizations that have effectively aligned their public presence with their purpose, connecting what they say with how they show up in the world and treating transparency and accountability as central to how trust is built.

Conclusion

The expectation that companies act with responsibility and transparency isn’t new. What has changed is the urgency.

We’re operating in an increasingly digital, increasingly fragmented environment where visibility comes easily, but credibility is harder to earn. As AI, automation, and other data-driven services become more visible to the public, people still look for signs that a human is accountable on the other side. When that signal is missing, confidence begins to slip. Trust is foundational. It shapes how companies move, build, and respond. When trust is strong, organizations have room to innovate and adapt to changing consumer preferences and market conditions. That kind of trust takes time. It can’t be forced or faked, but when it’s there, it shows.